within Krasnoyarsk

within 30 days

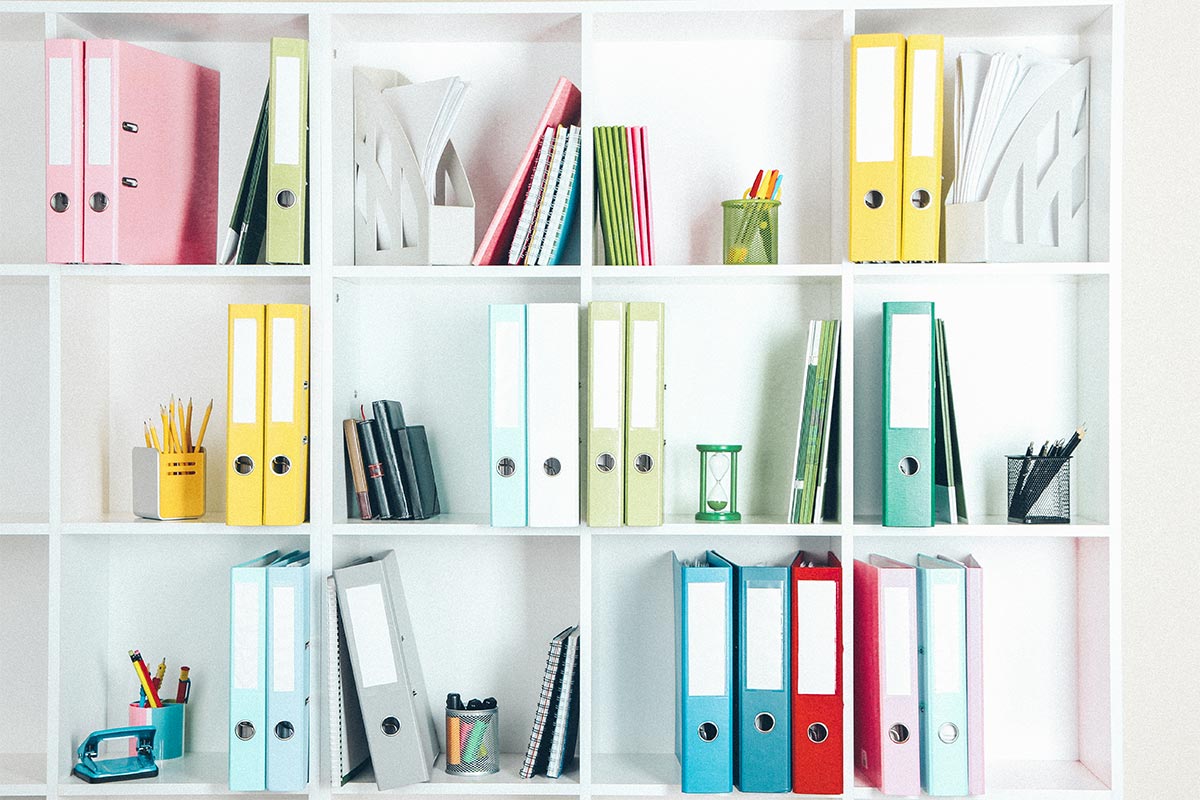

ДИК is your helper at the office and at home. Shopping is now much easier: we carry a wide range of stationery, paper products, office equipment and household appliances, electronics, and household chemicals, as well as souvenirs and gifts.

ДИК offers your organization a partnership for the supply of household goods and office stationery.

You can buy the most in-demand, high-quality stationery from us. Our range includes office supplies and school supplies. We sell stationery both wholesale and retail, sourced from reliable manufacturers with a track record proven over many years, at reasonable prices.

We offer wholesale and retail supply of storage media, photo goods, photo printing consumables, and batteries, as well as a wide variety of related products.

We supply products from economy to business class, so it is very important for us to stay in dialogue with our customers and to make sure the price-to-quality ratio stays optimal. We listen to our customers' feedback and continually expand our range.

We will help you choose the most suitable products, place your order, and have the goods delivered.